Between the ideaAnd the realityBetween the motionAnd the actFalls the Shadow– TS Eliot, The Hollow MenTry to leave out the part that readers tend to skip.– Elmore Leonard, 10 Rules for Writing

31 Network Simulations: ns-2¶

In previous chapters, especially 20 Dynamics of TCP, we have at times made simplifying assumptions about TCP Reno traffic. In the present chapter we will look at actual TCP behavior, through simulation, enabling us to explore the accuracy of some of these assumptions. The primary goal is to provide comparison between idealized TCP behavior and the often-messier real behavior; a secondary goal is perhaps to shed light on some of the issues involved in simulation. For example, in the discussion in 31.3 Two TCP Senders Competing of competition between TCP Reno connections with different RTTs, we address several technical issues that affect the relevance of simulation results.

Parts of this chapter may serve as a primer on using the ns-2 simulator, though a primer focused on the goal of illuminating some of the basic operation and theory of TCP through experimentation. However, some of the outcomes described may be of interest even to those not planning on designing their own simulations.

Simulation is frequently used in the literature when comparing different TCP flavors for effective throughput (for example, the graphs excerpted in 22.15 TCP CUBIC were created through simulation). An alternative to simulation, network emulation, involves running actual system networking software in a multi-node environment created through containerization or virtualization; we present this in 30 Mininet. An important advantage of simulation over emulation, however, is that as emulations get large and complex they also get bogged down, and it can be hard to distinguish results from artifacts.

We begin this chapter by looking at a single connection and analyzing how well the TCP sawtooth utilizes the bottleneck link. We then turn to competition between two TCP senders. The primary competition example here is between TCP Reno connections with different RTTs. This example allows us to explore the synchronized-loss hypothesis (20.3.4 Synchronized-Loss Hypothesis) and to introduce phase effects, transient queue overflows, and other unanticipated TCP behavior. We also introduce some elements of designing simulation experiments. The second example compares TCP Reno and TCP Vegas. We close with a straightforward example of a wireless simulation.

31.1 The ns-2 simulator¶

The tool used for much research-level network simulations is ns, for network simulator and originally developed at the Information Sciences Institute. The ns simulator grew out of the REAL simulator developed by Srinivasan Keshav [SK88]; later development work was done by the Network Research Group at the Lawrence Berkeley National Laboratory.

We will describe in this chapter the ns-2 simulator, hosted at www.isi.edu/nsnam/ns. There is now also an ns-3 simulator, available at www.nsnam.org. Because ns-3 is not backwards-compatible with ns-2 and the programming interface has changed considerably, we take the position that ns-3 is an entirely different package, though one likely someday to supercede ns-2 entirely. While there is a short introduction to ns-3 in this book (32 The ns-3 Network Simulator), its use is arguably quite a bit more complicated for beginners, and the particular simulation examples presented below are well-suited to ns-2. While ns-3 supports more complex and realistic modeling, and is the tool of choice for serious research, this added complexity comes at a price in terms of configuration and programming. The standard ns-2 tracefile format is also quite easy to work with using informal scripting.

Research-level use of ns-2 often involves building new modules in C++, and compiling them in to the system. For our purposes here, the stock ns-2 distribution is sufficient. The simplest way to install ns-2 is probably with the “allinone” distribution, which does still require compiling but comes with a very simple install script. (As of 2014, the ns-allinone-2.35 version appeared to compile only with gcc/g++ no more recent than version 4.6.)

The native environment for ns-2 (and ns-3) is Linux. Perhaps the simplest approach for Windows users is to install a Linux virtual machine, and then install ns-2 under that. It is also possible to compile ns-2 under the Cygwin system; an older version of ns-2 may still be available as a Cygwin binary.

To create an ns-2 simulation, we need to do the following (in addition to a modest amount of standard housekeeping).

- define the network topology, including all nodes, links and router queuing rules

- create some TCP (or UDP) connections, called Agents, and attach them to nodes

- create some Applications – usually FTP for bulk transfer or telnet for intermittent random packet generation – and attach them to the agents

- start the simulation

Once started, the simulation runs for the designated amount of time, driven by the packets generated by the Application objects. As the simulated applications generate packets for transmission, the ns-2 system calculates when these packets arrive and depart from each node, and generates simulated acknowledgment packets as appropriate. Unless delays are explicitly introduced, node responses – such as forwarding a packet or sending an ACK – are instantaneous. That is, if a node begins sending a simulated packet from node N1 to N2 at time T=1.000 over a link with bandwidth 60 ms per packet and with propagation delay 200 ms, then at time T=1.260 N2 will have received the packet. N2 will then respond at that same instant, if a response is indicated, eg by enqueuing the packet or by forwarding it if the queue is empty.

Ns-2 does not necessarily require assigning IP addresses to every node, though this is possible in more elaborate simulations.

Advanced use of ns-2 (and ns-3) often involves the introduction of randomization; for example, we will in 31.3 Two TCP Senders Competing introduce both random sliding-windows delays and traffic generators that release packets at random times. While it is possible to seed the random-number generator so that different runs of the same experiment yield different outcomes, we will not do this here, so the random-number generator will always produce the same sequence. A consequence is that the same ns-2 script should yield exactly the same result each time it is run.

31.1.1 Using ns-2¶

The scripting interface for ns-2 uses the language Tcl, pronounced “tickle”; more precisely it is object-Tcl, or OTcl. For simple use, learning the general Tcl syntax is not necessary; one can proceed quite successfully by modifying standard examples.

The network simulation is defined in a Tcl file, perhaps sim.tcl; to run the simulation one then runs the command

ns sim.tcl

The result of running the ns-2 simulation will be to create some files, and perhaps print some output. The most common files created are the ns-2 trace file – perhaps sim.tr – which contains a record for every packet arrival, departure and queue event, and a file sim.nam for the network animator, nam, that allows visual display of the packets in motion (the ns-3 version of nam is known as NetAnim). The sliding-windows video in 8.2 Sliding Windows was created with nam.

Within Tcl, variables can be assigned using the set command. Expressions in [ ... ] are evaluated. Numeric expressions must also use the expr command:

set foo [expr $foo + 1]

As in unix-style shell scripting, the value of a variable X is $X; the name X (without the $) is used when setting the value (in Perl and PHP, on the other hand, many variable names begin with $, which is included both when evaluating and setting the variable). Comments are on lines beginning with the ‘#’ character. Comments can not be appended to a line that contains a statement (although it is possible first to start a new logical line with ;).

Objects in the simulation are generally created using built-in constructors; the constructor in the line below is the part in the square brackets (recall that the brackets must enclose an expression to be evaluated):

set tcp0 [new Agent/TCP/Reno]

Object attributes can then be assigned values; for example, the following sets the data portion of the packets in TCP connection tcp0 to 960 bytes:

$tcp0 set packetSize_ 960

Object attributes are retrieved using set without a value; the following assigns variable ack0 the current value of the ack_ field of tcp0:

set ack0 [$tcp0 set ack_]

The goodput of a TCP connection is, properly, the number of application bytes received. This differs from the throughput – the total bytes sent – in two ways: the latter includes both packet headers and retransmitted packets. The ack0 value above includes no retransmissions; we will occasionally refer to it as “goodput” in this sense.

31.2 A Single TCP Sender¶

For our first script we demonstrate a single sender sending through a router. Here is the topology we will build, with the delays and bandwidths:

The smaller bandwidth on the R–B link makes it the bottleneck. The default TCP packet size in ns-2 is 1000 bytes, so the bottleneck bandwidth is nominally 100 packets/sec or 0.1 packets/ms. The bandwidth×RTTnoLoad product is 0.1 packets/ms × 120 ms = 12 packets. Actually, the default size of 1000 bytes refers to the data segment, and there are an additional 40 bytes of TCP and IP header. We therefore set packetSize_ to 960 so the actual transmitted size is 1000 bytes; this makes the bottleneck bandwidth exactly 100 packets/sec.

We want the router R to have a queue capacity of 6 packets, plus the one currently being transmitted; we set queue-limit = 7 for this. We create a TCP connection between A and B, create an ftp sender on top that, and run the simulation for 20 seconds. The nodes A, B and R are named; the links are not.

The ns-2 default maximum window size is 20; we increase that to 100 with $tcp0 set window_ 100; otherwise we will see an artificial cap on the cwnd growth (in the next section we will increase this to 65000).

The script itself is in a file basic1.tcl, with the 1 here signifying a single sender.

# basic1.tcl simulation: A---R---B

#Create a simulator object

set ns [new Simulator]

#Open the nam file basic1.nam and the variable-trace file basic1.tr

set namfile [open basic1.nam w]

$ns namtrace-all $namfile

set tracefile [open basic1.tr w]

$ns trace-all $tracefile

#Define a 'finish' procedure

proc finish {} {

global ns namfile tracefile

$ns flush-trace

close $namfile

close $tracefile

exit 0

}

#Create the network nodes

set A [$ns node]

set R [$ns node]

set B [$ns node]

#Create a duplex link between the nodes

$ns duplex-link $A $R 10Mb 10ms DropTail

$ns duplex-link $R $B 800Kb 50ms DropTail

# The queue size at $R is to be 7, including the packet being sent

$ns queue-limit $R $B 7

# some hints for nam

# color packets of flow 0 red

$ns color 0 Red

$ns duplex-link-op $A $R orient right

$ns duplex-link-op $R $B orient right

$ns duplex-link-op $R $B queuePos 0.5

# Create a TCP sending agent and attach it to A

set tcp0 [new Agent/TCP/Reno]

# We make our one-and-only flow be flow 0

$tcp0 set class_ 0

$tcp0 set window_ 100

$tcp0 set packetSize_ 960

$ns attach-agent $A $tcp0

# Let's trace some variables

$tcp0 attach $tracefile

$tcp0 tracevar cwnd_

$tcp0 tracevar ssthresh_

$tcp0 tracevar ack_

$tcp0 tracevar maxseq_

#Create a TCP receive agent (a traffic sink) and attach it to B

set end0 [new Agent/TCPSink]

$ns attach-agent $B $end0

#Connect the traffic source with the traffic sink

$ns connect $tcp0 $end0

#Schedule the connection data flow; start sending data at T=0, stop at T=10.0

set myftp [new Application/FTP]

$myftp attach-agent $tcp0

$ns at 0.0 "$myftp start"

$ns at 10.0 "finish"

#Run the simulation

$ns run

After running this script, there is no command-line output (because we did not ask for any); however, the files basic1.tr and basic1.nam are created. Perhaps the simplest thing to do at this point is to view the animation with nam, using the command nam basic1.nam.

In the animation we can see slow start at the beginning, as first one, then two, then four and then eight packets are sent. A little past T=0.7, we can see a string of packet losses. This is visible in the animation as a tumbling series of red squares from the top of R’s queue. After that, the TCP sawtooth takes over; we alternate between the cwnd linear-increase phase (congestion avoidance), packet loss, and threshold slow start. During the linear-increase phase the bottleneck link is at first incompletely utilized; once the bottleneck link is saturated the router queue begins to build.

31.2.1 Graph of cwnd v time¶

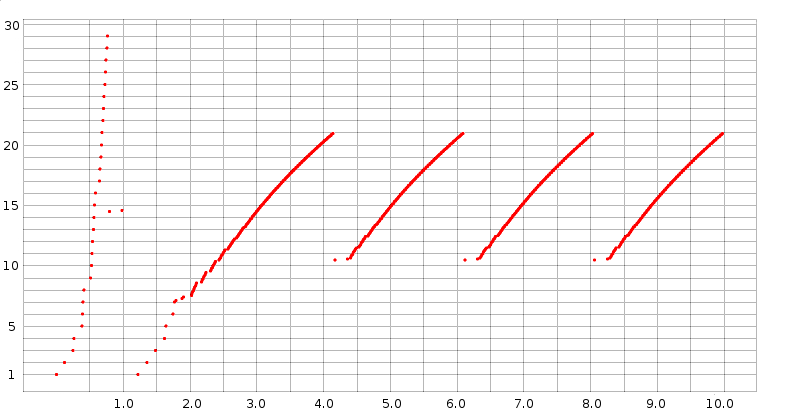

Here is a graph of cwnd versus time, prepared (see below) from data in the trace file basic1.tr:

Slow start is at the left edge. Unbounded slow start runs until about T=0.75, when a timeout occurs; bounded slow start runs from about T=1.2 to T=1.8. After that, all losses have been handled with fast recovery (we can tell this because cwnd does not drop below half its previous peak). The first three teeth following slow start have heights (cwnd peak values) of 20.931, 20.934 and 20.934 respectively; when the simulation is extended to 1000 seconds all subsequent peaks have exactly the same height, cwnd = 20.935. The spacing between the peaks is also constant, 1.946 seconds.

Because cwnd is incremented by ns-2 after each arriving ACK as described in 19.2.1 TCP Reno Per-ACK Responses, during the linear-increase phase there are a great many data points jammed together; the bunching effect is made stronger by the choice here of a large-enough dot size to make the slow-start points clearly visible. This gives the appearance of continuous line segments. Upon close examination, these line segments are slightly concave, as discussed in 22.5 Highspeed TCP, due to the increase in RTT as the queue fills. Individual flights of packets can just be made out at the lower-left end of each tooth, especially the first.

31.2.2 The Trace File¶

To examine the simulation (or, for that matter, the animation) more quantitatively, we turn to a more detailed analysis of the trace file, which contains records for all packet events plus (because it was requested) variable-trace information for cwnd_, ssthresh_, ack_ and maxseq_; these were the variables for which we requested traces in the basic1.tcl file above.

The bulk of the trace-file lines are event records; three sample records are below. (These are in the default event-record format for point-to-point links; ns-2 has other event-record formats for wireless. See use-newtrace in 31.6 Wireless Simulation below.)

r 0.58616 0 1 tcp 1000 ------- 0 0.0 2.0 28 43

+ 0.58616 1 2 tcp 1000 ------- 0 0.0 2.0 28 43

d 0.58616 1 2 tcp 1000 ------- 0 0.0 2.0 28 43

The twelve event-record fields are as follows:

- r for received, d for dropped, + for enqueued, - for dequeued. Every arriving packet is enqueued, even if it is immediately dequeued. The third packet above was the first dropped packet in the entire simulation.

- the time, in seconds.

- the number of the sending node, in the order of node definition and starting at 0. If the first field was “+”, “-” or “d”, this is the number of the node doing the enqueuing, dequeuing or dropping. Events beginning with “-” represent this node sending the packet.

- the number of the destination node. If the first field was “r”, this record represents the packet’s arrival at this node.

- the protocol.

- the packet size, 960 bytes of data (as we requested) plus 20 of TCP header and 20 of IP header.

- some TCP flags, here represented as “

-------” because none of the flags are set. Flags include E and N for ECN and A for reduction in the advertised winsize. - the flow ID. Here we have only one: flow 0. This value can be set via the

fid_variable in the Tcl source file; an example appears in the two-sender version below. The same flow ID is used for both directions of a TCP connection. - the source node (0.0), in form (node . connectionID). ConnectionID numbers here are simply an abstraction for connection endpoints; while they superficially resemble port numbers, the node in question need not even simulate IP, and each connection has a unique connectionID at each end. ConnectionID numbers start at 0.

- the destination node (2.0), again with connectionID.

- the packet sequence number as a TCP packet, starting from 0.

- a packet identifier uniquely identifying this packet throughout the simulation; when a packet is forwarded on a new link it keeps its old sequence number but gets a new packet identifier.

The three trace lines above represent the arrival of packet 28 at R, the enqueuing of packet 28, and then the dropping of the packet. All these happen at the same instant.

Mixed in with the event records are variable-trace records, indicating a particular variable has been changed. Here are two examples from t=0.3833:

0.38333 0 0 2 0 ack_ 3

0.38333 0 0 2 0 cwnd_ 5.000

The format of these lines is

- time

- source node of the flow

- source port (as above, an abstract connection endpoint, not a simulated TCP port)

- destination node of the flow

- destination port

- name of the traced variable

- value of the traced variable

The two variable-trace records above are from the instant when the variable cwnd_ was set to 5. It was initially 1 at the start of the simulation, and was incremented upon arrival of each of ack0, ack1, ack2 and ack3. The first line shows the ack counter reaching 3 (that is, the arrival of ack3); the second line shows the resultant change in cwnd_.

The graph above of cwnd v time was made by selecting out these cwnd_ lines and plotting the first field (time) and the last. (Recall that during the linear-increase phase cwnd is incremented by 1.0/cwnd with each arriving new ACK.)

The last ack in the tracefile is

9.98029 0 0 2 0 ack_ 808

Since ACKs started with number 0, this means we sent 809 packets successfully. The theoretical bandwidth was 100 packets/sec × 10 sec = 1000 packets, so this is about an 81% goodput. Use of the ack_ value this way tells us how much data was actually delivered. An alternative statistic is the final value of maxseq_ which represents the number of distinct packets sent; the last maxseq_ line is

9.99029 0 0 2 0 maxseq_ 829

As can be seen from the cwnd-v-time graph above, slow start ends around T=2.0. If we measure goodput from then until the end, we do a little better than 81%. The first data packet sent after T=2.0 is at 2.043184; it is data packet 72. 737 packets are sent from packet 72 until packet 808 at the end; 737 packets in 8.0 seconds is a goodput of 92%.

It is not necessary to use the tracefile to get the final values of TCP variables such as ack_ and maxseq_; they can be printed from within the Tcl script’s finish() procedure. The following example illustrates this, where ack_ and maxseq_ come from the connection tcp0. The global line lists global variables that are to be made available within the body of the procedure; tcp0 must be among these.

proc finish {} {

global ns nf f tcp0

$ns flush-trace

close $namfile

close $tracefile

set lastACK [$tcp0 set ack_]

set lastSEQ [$tcp0 set maxseq_]

puts stdout "final ack: $lastACK, final seq num: $lastSEQ"

exit 0

}

For TCP sending agents, useful member variables to set include:

class_: the identifying number of a flowwindow_: the maximum window size; the default is much too small.packetSize_: we set this to 960 above so the total packet size would be 1000.

Useful member variables either to trace or to print at the simulation’s end include:

maxseq_: the number of the last packet sent, starting at 1 for data packetsack_: the number of the last ACK receivedcwnd_: the current value of the congestion windownrexmitpack_: the number of retransmitted packets

To get a count of the data actually received, we need to look at the TCPsink object, $end0 above. There is no packet counter here, but we can retrieve the value bytes_ representing the total number of bytes received. This will include 40 bytes from the threeway handshake which can either be ignored or subtracted:

set ACKed [expr round ( [$end0 set bytes_] / 1000.0)]

This is a slightly better estimate of goodput. In very long simulations, however, this (or any other) byte count will wrap around long before any of the packet counters wrap around.

In the example above every packet event was traced, a consequence of the line

$ns trace-all $trace

We could instead have asked only to trace particular links. For example, the following line would request tracing for the bottleneck (R⟶B) link:

$ns trace-queue $R $B $trace

This is often useful when the overall volume of tracing is large, and we are interested in the bottleneck link only. In long simulations, full tracing can increase the runtime 10-fold; limiting tracing only to what is actually needed can be quite important.

31.2.3 Single Losses¶

By examining the basic1.tr file above for packet-drop records, we can confirm that only a single drop occurs at the end of each tooth, as was argued in 19.8 Single Packet Losses. After slow start finishes at around T=2, the next few drops are at T=3.963408, T=5.909568 and T=7.855728. The first of these drops is of Data[254], as is shown by the following record:

d 3.963408 1 2 tcp 1000 ------- 0 0.0 2.0 254 531

Like most “real” implementations, the ns-2 implementation of TCP increments cwnd (cwnd_ in the tracefile) by 1/cwnd on each new ACK (19.2.1 TCP Reno Per-ACK Responses). An additional packet is sent by A whenever cwnd is increased this way past another whole number; that is, whenever floor(cwnd) increases. At T=3.95181, cwnd_ was incremented to 20.001, triggering the double transmission of Data[253] and the doomed Data[254]. At this point the RTT is around 190 ms.

The loss of Data[254] is discovered by Fast Retransmit when the third dupACK[253] arrives. The first ACK[253] arrives at A at T=4.141808, and the dupACKs arrive every 10 ms, clocked by the 10 ms/packet transmission rate of R. Thus, A detects the loss at T=4.171808; at this time we see cwnd_ reduced by half to 10.465; the tracefile times for variables are only to 5 decimal places, so this is recorded as

4.17181 0 0 2 0 cwnd_ 10.465

That represents an elapsed time from when Data[254] was dropped of 207.7 ms, more than one RTT. As described in 19.8 Single Packet Losses, however, A stopped incrementing cwnd_ when the first ACK[253] arrived at T=4.141808. The value of cwnd_ at that point is only 20.931, not quite large enough to trigger transmission of another back-to-back pair and thus eventually a second packet drop.

31.2.4 Reading the Tracefile in Python¶

Deeper analysis of ns-2 data typically involves running some sort of script on the tracefiles; we will mostly use the Python (python3) language for this, although the awk language is also traditional. The following is the programmer interface to a simple module (library) nstrace.py:

- nsopen(filename): opens the tracefile

- isEvent(): returns

trueif the current line is a normal ns-2 event record - isVar(): returns

trueif the current line is an ns-2 variable-trace record - isEOF(): returns

trueif there are no more tracefile lines - getEvent(): returns a twelve-element tuple of the ns-2 event-trace values, each cast to the correct type. The ninth and tenth values, which are node.port pairs in the tracefile, are returned as (node port) sub-tuples.

- getVar(): returns a seven-element tuple of ns-2 variable-trace values

- skipline(): skips the current line (useful if we are interested only in event records, or only in variable-trace records, and want to ignore the other type of record)

We will first make use of this in 31.2.6.1 Link utilization measurement; see also 31.4 TCP Loss Events and Synchronized Losses. The nstrace.py file above includes regular-expression checks to verify that each tracefile line has the correct format, but, as these are slow, they are disabled by default. Enabling these checks is potentially useful, however, if some wireless trace records are also included.

31.2.5 The nam Animation¶

Let us now re-examine the nam animation, in light of what can be found in the trace file.

At T=0.120864, the first 1000-byte data packet is sent (at T=0 a 40-byte SYN packet is sent); the actual packet identification number is 1 so we will refer to it as Data[1]. At this point cwnd_ = 2, so Data[2] is enqueued at this same time, and sent at T=0.121664 (the delay exactly matches the A–R link’s bandwidth of 8000 bits in 0.0008 sec). The first loss occurs at T=0.58616, of Data[28]; at T=0.59616 Data[30] is lost. (Data[29] was not lost because R was able to send a packet and thus make room).

From T=.707392 to T=.777392 we begin a string of losses: packets 42, 44, 46, 48, 50, 52, 54 and 56.

At T=0.76579 the first ACK[27] makes it back to A. The first dupACK[27] arrives at T=0.77576; another arrives at T=0.78576 (10 ms later, exactly the bottleneck per-packet time!) and the third dupACK arrives at T=0.79576. At this point, Data[28] is retransmitted and cwnd is halved from 29 to 14.5.

At T=0.985792, the sender receives ACK[29]. DupACK[29]’s are received at T=1.077024, T=1.087024, T=1.097024 and T=1.107024. Alas, this is TCP Reno, in Fast Recovery mode, and it is not implementing Fast Retransmit while Fast Recovery is going on (TCP NewReno in effect fixes this). Therefore, the connection experiences a hard timeout at T=1.22579; the last previous event was at T=1.107024. At this point ssthresh is set to 7 and cwnd drops to 1. Slow start is used up to ssthresh = 7, at which point the sender switches to the linear-increase phase.

31.2.6 Single-sender Throughput Experiments¶

According to the theoretical analysis in 19.7 TCP and Bottleneck Link Utilization, a queue size of close to zero should yield about a 75% bottleneck utilization, a queue size such that the mean cwnd equals the transit capacity should yield about 87.5%, and a queue size equal to the transit capacity should yield close to 100%. We now test this.

We first increase the per-link propagation times in the basic1.tcl simulation above to 50 and 100 ms:

$ns duplex-link $A $R 10Mb 50ms DropTail

$ns duplex-link $R $B 800Kb 100ms DropTail

The bottleneck link here is 800 kbps, or 100 kBps, or 10 ms/packet, so these propagation-delay changes mean a round-trip transit capacity of 30 packets (31 if we include the bandwidth delay at R). In the table below, we run the simulation while varying the queue-limit parameter from 3 to 30. The simulations run for 1000 seconds, to minimize the effect of slow start. Tracing is disabled to reduce runtimes. The “received” column gives the number of distinct packets received by B; if the link utilization were 100% then in 1,000 seconds B would receive 100,000 packets.

| queue_limit | received | utilization %, R→B |

|---|---|---|

| 3 | 79767 | 79.8 |

| 4 | 80903 | 80.9 |

| 5 | 83313 | 83.3 |

| 8 | 87169 | 87.2 |

| 10 | 89320 | 89.3 |

| 12 | 91382 | 91.4 |

| 16 | 94570 | 94.6 |

| 20 | 97261 | 97.3 |

| 22 | 98028 | 98.0 |

| 26 | 99041 | 99.0 |

| 30 | 99567 | 99.6 |

In ns-2, every arriving packet is first enqueued, even if it is immediately dequeued, and so queue-limit cannot actually be zero. A queue-limit of 1 or 2 gives very poor results, probably because of problems with slow start. The run here with queue-limit = 3 is not too far out of line with the 75% predicted by theory for a queue-limit close to zero. When queue-limit is 10, then cwnd will range from 20 to 40, and the link-unsaturated and queue-filling phases should be of equal length. This leads to a theoretical link utilization of about (75%+100%)/2 = 87.5%; our measurement here of 89.3% is in good agreement. As queue-limit continues to increase, the link utilization rapidly approaches 100%, again as expected.

31.2.6.1 Link utilization measurement¶

In the experiment above we estimated the utilization of the R→B link by the number of distinct packets arriving at B. But packet duplicate transmissions sometimes occur as well (see 31.2.6.4 Packets that are delivered twice); these are part of the R→B link utilization but are hard to estimate (nominally, most packets retransmitted by A are dropped by R, but not all).

If desired, we can get an exact value of the R→B link utilization through analysis of the ns-2 trace file. In this file R is node 1 and B is node 2 and our flow is flow 0; we look for all lines of the form

- time 1 2 tcp 1000-------0 0.0 2.0 x y

that is, with field1 = ‘-‘, field3 = 1, field4 = 2, field6 = 1000 and field8 = 0 (if we do not check field6=1000 then we count one extra packet, a simulated SYN). We then simply count these lines; here is a simple script to do this in Python using the nstrace module above:

#!/usr/bin/python3

import nstrace

import sys

def link_count(filename):

SEND_NODE = 1

DEST_NODE = 2

FLOW = 0

count = 0

nstrace.nsopen(filename)

while not nstrace.isEOF():

if nstrace.isEvent():

(event, time, sendnode, dest, dummy, size, dummy, flow, dummy, dummy, dummy, dummy) = nstrace.getEvent()

if (event == "-" and sendnode == SEND_NODE and dest == DEST_NODE and size >= 1000 and flow == FLOW):

count += 1

else:

nstrace.skipline()

print ("packet count:", count);

link_count(sys.argv[1])

For completeness, here is the same program implemented in the Awk scripting language.

BEGIN {count=0; SEND_NODE=1; DEST_NODE=2; FLOW=0}

$1 == "-" { if ($3 == SEND_NODE && $4 == DEST_NODE && $6 >= 1000 && $8 == FLOW) {

count++;

}

}

END {print count;}

31.2.6.2 ns-2 command-line parameters¶

Experiments where we vary one parameter, eg queue-limit, are facilitated by passing in the parameter value on the command line. For example, in the basic1.tcl file we can include the following:

set queuesize $argv

...

$ns queue-limit $R $B $queuesize

Then, from the command line, we can run this as follows:

ns basic1.tcl 5

If we want to run this simulation with parameters ranging from 0 to 10, a simple shell script is

queue=0

while [ $queue -le 10 ]

do

ns basic1.tcl $queue

queue=$(expr $queue + 1)

done

If we want to pass multiple parameters on the command line, we use lindex to separate out arguments from the $argv string; the first argument is at position 0 (in bash and awk scripts, by comparison, the first argument is $1). For two optional parameters, the first representing queuesize and the second representing endtime, we would use

if { $argc >= 1 } {

set queuesize [expr [lindex $argv 0]]

}

if { $argc >= 2 } {

set endtime [expr [lindex $argv 1]]

}

31.2.6.3 Queue utilization¶

In our previous experiment we examined link utilization when queue-limit was smaller than the bandwidth×delay product. Now suppose queue-limit is greater than the bandwidth×delay product, so the bottleneck link is essentially never idle. We calculated in 19.7 TCP and Bottleneck Link Utilization what we might expect as an average queue utilization. If the transit capacity is 30 packets and the queue capacity is 40 then cwndmax would be 70 and cwndmin would be 35; the queue utilization would vary from 70-30 = 40 down to 35-30 = 5, averaging around (40+5)/2 = 22.5.

Let us run this as an ns-2 experiment. As before, we set the A–R and R–B propagation delays to 50 ms and 100 ms respectively, making the RTTnoLoad 300 ms, for about 30 packets in transit. We also set the queue-limit value to 40. The simulation runs for 1000 seconds, enough, as it turns out, for about 50 TCP sawteeth.

At the end of the run, the following Python script maintains a running time-weighted average of the queue size. Because the queue capacity exceeds the total transit capacity, the queue is seldom empty.

#!/usr/bin/python3

import nstrace

import sys

def queuesize(filename):

QUEUE_NODE = 1

nstrace.nsopen(filename)

sum = 0.0

size= 0

prevtime=0

while not nstrace.isEOF():

if nstrace.isEvent(): # counting regular trace lines

(event, time, sendnode, dnode, proto, dummy, dummy, flow, dummy, dummy, seqno, pktid) = nstrace.getEvent()

if (sendnode != QUEUE_NODE): continue

if (event == "r"): continue

sum += size * (time -prevtime)

prevtime = time

if (event=='d'): size -= 1

elif (event=="-"): size -= 1

elif (event=="+"): size += 1

else:

nstrace.skipline()

print("avg queue=", sum/time)

queuesize(sys.argv[1])

The answer we get for the average queue size is about 23.76, which is in good agreement with our theoretical value of 22.5.

31.2.6.4 Packets that are delivered twice¶

Every dropped TCP packet is ultimately transmitted twice, but classical TCP theory suggests that relatively few packets are actually delivered twice. This is pretty much true once the TCP sawtooth phase is reached, but can fail rather badly during slow start.

The following Python script will count packets delivered two or more times. It uses a dictionary, COUNTS, which is indexed by sequence numbers.

#!/usr/bin/python3

import nstrace

import sys

def dup_counter(filename):

SEND_NODE = 1

DEST_NODE = 2

FLOW = 0

count = 0

COUNTS = {}

nstrace.nsopen(filename)

while not nstrace.isEOF():

if nstrace.isEvent():

(event, time, sendnode, dest, dummy, size, dummy, flow, dummy, dummy, seqno, dummy) = nstrace.getEvent()

if (event == "r" and dest == DEST_NODE and size >= 1000 and flow == FLOW):

if (seqno in COUNTS):

COUNTS[seqno] += 1

else:

COUNTS[seqno] = 1

else:

nstrace.skipline()

for seqno in sorted(COUNTS.keys()):

if (COUNTS[seqno] > 1): print(seqno, COUNTS[seqno])

dup_counter(sys.argv[1])

When run on the basic1.tr file above, it finds 13 packets delivered twice, with TCP sequence numbers 43, 45, 47, 49, 51, 53, 55, 57, 58, 59, 60, 61 and 62. These are sent the second time between T=1.437824 and T=1.952752; the first transmissions are at times between T=0.83536 and T=1.046592. If we look at our cwnd-v-time graph above, we see that these first transmissions occurred during the gap between the end of the unbounded slow-start phase and the beginning of threshold-slow-start leading up to the TCP sawtooth. Slow start, in other words, is messy.

31.2.6.5 Loss rate versus cwnd: part 1¶

If we run the basic1.tcl simulation above until time 1000, there are 94915 packets acknowledged and 512 loss events. This yields a loss rate of p = 512/94915 = 0.00539, and so by the formula of 21.2 TCP Reno loss rate versus cwnd we should expect the average cwnd to be about 1.225/√p ≃ 16.7. The true average cwnd is the number of packets sent divided by the elapsed time in RTTs, but as RTTs are not constant here (they get significantly longer as the queue fills), we turn to an approximation. From 31.2.1 Graph of cwnd v time we saw that the peak cwnd was 20.935; the mean cwnd should thus be about 3/4 of this, or 15.7. While not perfect, agreement here is quite reasonable.

See also 31.4.3 Loss rate versus cwnd: part 2.

31.3 Two TCP Senders Competing¶

Now let us create a simulation in which two TCP Reno senders compete for the bottleneck link, and see how fair an allocation each gets. According to the analysis in 20.3 TCP Reno Fairness with Synchronized Losses, this is really a test of the synchronized-loss hypothesis, and so we will also examine the ns-2 trace files for losses and loss responses. We will start with “classic” TCP Reno, but eventually also consider SACK TCP. Note that, in terms of packet losses in the immediate vicinity of any one queue-filling event, we can expect TCP Reno and SACK TCP to behave identically; they differ only in how they respond to losses.

The initial topology will be as follows (though we will very soon raise the bandwidths tenfold, though not the propagation delays):

Broadly speaking, the simulations here will demonstrate that the longer-delay B–D connection receives less bandwidth than the A–D connection, but not quite so much less as was predicted in 20.3 TCP Reno Fairness with Synchronized Losses. The synchronized-loss hypothesis increasingly fails as the B–R delay increases, in that the B–D connection begins to escape some of the packet-loss events experienced by the A–D connection.

We admit at the outset that we will not, however, obtain a quantitative answer to the question of bandwidth allocation. In fact, as we shall see, we run into some difficulties even formulating the proper question. In the course of developing the simulation, we encounter several potential problems:

- The two senders can become synchronized in an unfortunate manner

- When we resolve the previous issue by introducing randomness, the bandwidth division is sensitive to the method selected

- As R’s queue fills, the RTT may increase significantly, thus undermining RTT-based measurements (31.3.9 The RTT Problem)

- Transient queue spikes may introduce unexpected losses

- Coarse timeouts may introduce additional unexpected losses

The experiments and analyses below divide into two broad categories. In the first category, we make use only of the final goodput measurements for the two connections. We consider the first two points of the list above in 31.3.4 Phase Effects, and the third in 31.3.9 The RTT Problem and 31.3.10 Raising the Bandwidth. The first category concludes with some simple loss modeling in 31.3.10.1 Possible models.

In the second category, beginning at 31.4 TCP Loss Events and Synchronized Losses, we make use of the ns-2 tracefiles to extract information about packet losses and the extent to which they are synchronized. Examples related to points four and five of the list above are presented in 31.4.1 Some TCP Reno cwnd graphs. The second category concludes with 31.4.2 SACK TCP and Avoiding Loss Anomalies, in which we demonstrate that SACK TCP is, in terms of loss and recovery, much better behaved than TCP Reno.

31.3.1 The Tcl Script¶

Below is a simplified version of the ns-2 script for our simulation; the full version is at basic2.tcl. The most important variable is the additional one-way delay on the B–R link, here called delayB. Other defined variables are queuesize (for R’s queue_limit), bottleneckBW (for the R–D bandwidth), endtime (the length of the simulation), and overhead (for introducing some small degree of randomization, below). As with basic1.tcl, we set the packet size to 1000 bytes total (960 bytes TCP portion), and increase the advertised window size to 65000 (so it is never the limiting factor).

We have made the delayB value be a command-line parameter to the Tcl file, so we can easily experiment with changing it (in the full version linked to above, overhead, bottleneckBW, endtime and queuesize are also parameters). The one-way propagation delay for the A–D path is 10 ms + 100 ms = 110 ms, making the RTT 220 ms plus the bandwidth delays. At the bandwidths above, the bandwidth delay for data packets adds an additional 11 ms; ACKs contribute an almost-negligible 0.44 ms. We return to the script variable RTTNL, intended to approximate RTTnoLoad, below.

With endtime=300, the theoretical maximum number of data packets that can be delivered is 30,000. If bottleneckBW = 0.8 Mbps (100 packets/sec) then the R–D link can hold ten R⟶D data packets in transit, plus another ten D⟶R ACKs.

In the finish() procedure we have added code to print out the number of packets received by D for each connection; we could also extract this from the trace file.

To gain better control over printing, we have used the format command, which works something like C’s sprintf. It returns a string containing spliced-in numeric values replacing the corresponding %d or %f tokens in the control string; this returned string can then be printed with puts.

The full version linked to above also contains some nam directives, support for command-line arguments, and arranges to name any tracefiles with the same base filename as the Tcl file.

# NS basic2.tcl example of two TCPs competing on the same link.

# Create a simulator object

set ns [new Simulator]

#Open the trace file

set trace [open basic2.tr w]

$ns trace-all $trace

############## some globals (modify as desired) ##############

# queuesize on bottleneck link

set queuesize 20

# default run time, in seconds

set endtime 300

# "overhead" of D>0 introduces a uniformly randomized delay d, 0≤d≤D; 0 turns it off.

set overhead 0

# delay on the A--R link, in ms

set basedelay 10

# ADDITIONAL delay on the B--R link, in ms

set delayB 0

# bandwidth on the bottleneck link, either 0.8 or 8.0 Mbit

set bottleneckBW 0.8

# estimated no-load RTT for the first flow, in ms

set RTTNL 220

############## arrange for output ##############

set outstr [format "parameters: delayB=%f overhead=%f bottleneckBW=%f" $delayB $overhead $bottleneckBW]

puts stdout $outstr

# Define a 'finish' procedure that prints out progress for each connection

proc finish {} {

global ns tcp0 tcp1 end0 end1 queuesize trace delayB overhead RTTNL

set ack0 [$tcp0 set ack_]

set ack1 [$tcp1 set ack_]

# counts of packets *received*

set recv0 [expr round ( [$end0 set bytes_] / 1000.0)]

set recv1 [expr round ( [$end1 set bytes_] / 1000.0)]

# see numbers below in topology-creation section

set rttratio [expr (2.0*$delayB+$RTTNL)/$RTTNL]

# actual ratio of throughputs fast/slow; the 1.0 forces floating point

set actualratio [expr 1.0*$recv0/$recv1]

# theoretical ratio fast/slow with squaring; see text for discussion of ratio1 and ratio2

set rttratio2 [expr $rttratio*$rttratio]

set ratio1 [expr $actualratio/$rttratio]

set ratio2 [expr $actualratio/$rttratio2]

set outstr [format "%f %f %d %d %f %f %f %f %f" $delayB $overhead $recv0 $recv1 $rttratio $rttratio2 $actualratio $ratio1 $ratio2 ]

puts stdout $outstr

$ns flush-trace

close $trace

exit 0

}

############### create network topology ##############

# A

# \

# \

# R---D (Destination)

# /

# /

# B

#Create four nodes

set A [$ns node]

set B [$ns node]

set R [$ns node]

set D [$ns node]

set fastbw [expr $bottleneckBW * 10]

#Create links between the nodes; propdelay on B--R link is 10+$delayB ms

$ns duplex-link $A $R ${fastbw}Mb ${basedelay}ms DropTail

$ns duplex-link $B $R ${fastbw}Mb [expr $basedelay + $delayB]ms DropTail

# this last link is the bottleneck; 1000 bytes at 0.80Mbps => 10 ms/packet

# A--D one-way delay is thus 110 ms prop + 11 ms bandwidth

# the values 0.8Mb, 100ms are from Floyd & Jacobson

$ns duplex-link $R $D ${bottleneckBW}Mb 100ms DropTail

$ns queue-limit $R $D $queuesize

############## create and connect TCP agents, and start ##############

Agent/TCP set window_ 65000

Agent/TCP set packetSize_ 960

Agent/TCP set overhead_ $overhead

#Create a TCP agent and attach it to node A, the delayed path

set tcp0 [new Agent/TCP/Reno]

$tcp0 set class_ 0

# set the flowid here, used as field 8 in the trace

$tcp0 set fid_ 0

$tcp0 attach $trace

$tcp0 tracevar cwnd_

$tcp0 tracevar ack_

$ns attach-agent $A $tcp0

set tcp1 [new Agent/TCP/Reno]

$tcp1 set class_ 1

$tcp1 set fid_ 1

$tcp1 attach $trace

$tcp1 tracevar cwnd_

$tcp1 tracevar ack_

$ns attach-agent $B $tcp1

set end0 [new Agent/TCPSink]

$ns attach-agent $D $end0

set end1 [new Agent/TCPSink]

$ns attach-agent $D $end1

#Connect the traffic source with the traffic sink

$ns connect $tcp0 $end0

$ns connect $tcp1 $end1

#Schedule the connection data flow

set ftp0 [new Application/FTP]

$ftp0 attach-agent $tcp0

set ftp1 [new Application/FTP]

$ftp1 attach-agent $tcp1

$ns at 0.0 "$ftp0 start"

$ns at 0.0 "$ftp1 start"

$ns at $endtime "finish"

#Run the simulation

$ns run

31.3.2 Equal Delays¶

We first try this out by running the simulation with equal delays on both A–R and R–B. The following values are printed out (arranged here vertically to allow annotation)

| value | variable | meaning |

|---|---|---|

| 0.000000 | delayB |

Additional B–R propagation delay, compared to A–R delay |

| 0.000000 | overhead |

overhead; a value of 0 means this is effectively disabled |

| 14863 | recv0 |

Count of cumulative A–D packets received at D (that is, goodput) |

| 14771 | recv1 |

Count of cumulative B–D packets received at D (again, goodput) |

| 1.000000 | rttratio |

RTT_ratio: B–D/A–D (long/short) |

| 1.000000 | rttratio2 |

The square of the previous value |

| 1.006228 | actualratio |

Actual ratio of A–D/B–D goodput, that is, 14863/14771 (note change in order versus RTT_ratio) |

| 1.006228 | ratio1 |

actual_ratio/RTT_ratio |

| 1.006228 | ratio2 |

actual_ratio/RTT_ratio2 |

The one-way A–D propagation delay is 110 ms; the bandwidth delays as noted above amount to 11.44 ms, 10 ms of which is on the R–D link. This makes the A–D RTTnoLoad about 230 ms. The B–D delay is, for the time being, the same, as delayB = 0. We set RTTNL = 220, and calculate the RTT ratio (within Tcl, in the finish() procedure) as (2×delayB + RTTNL)/RTTNL. We really should use RTTNL=230 instead of 220 here, but 220 will be closer when we later change bottleneckBW to 8.0 Mbit/sec rather than 0.8, below. Either way, the difference is modest.

Note that the above RTT calculations are for when the queue at R is empty; when the queue contains 20 packets this adds another 200 ms to the A⟶D and B⟶D times (the reverse direction is unchanged). This may make a rather large difference to the RTT ratio, and we will address it below, but does not matter yet because the propagation delays so far are identical.

In the model of 20.3.3 TCP Reno RTT bias we explored a model in which we expect that ratio2, above, would be about 1.0. The final paragraph of 21.2.2 Unsynchronized TCP Losses hints at a possible model (the 𝛾=𝜆 case) in which ratio1 would be about 1.0. We will soon be in a position to test these theories experimentally. Note that the order of B and A in the goodput and RTT ratios is reversed, to reflect the expected inverse relationship.

In the 300-second run here, 14863+14771 = 29634 packets are sent. This means that the bottleneck link is 98.8% utilized.

In 31.4.1 Some TCP Reno cwnd graphs we will introduce a script (teeth.py) to count and analyze the teeth of the TCP sawtooth. Applying this now, we find there are 67 loss events total, and thus 67 teeth, and in every loss event each flow loses exactly one packet. This is remarkably exact conformance to the synchronized-loss hypothesis of 20.3.3 TCP Reno RTT bias. So far.

31.3.3 Unequal Delays¶

We now begin increasing the additional B–R delay (delayB). Some preliminary data are in the table below, and point to a significant problem: goodput ratios in the last column here are not varying smoothly. The value of 0 ms in the first row means that the B–R delay is equal to the A–R delay; the value of 110 ms in the last row means that the B–D RTTnoLoad is double the A–D RTTnoLoad. The column labeled (RTT ratio)2 is the expected goodput ratio, according to the model of 20.3 TCP Reno Fairness with Synchronized Losses; the actual A–D/B–D goodput ratio is in the final column.

| delayB | RTT ratio | A–D goodput | B–D goodput | (RTT ratio)2 | goodput ratio |

|---|---|---|---|---|---|

| 0 | 1.000 | 14863 | 14771 | 1.000 | 1.006 |

| 5 | 1.045 | 4229 | 24545 | 1.093 | 0.172 |

| 23 | 1.209 | 22142 | 6879 | 1.462 | 3.219 |

| 24 | 1.218 | 17683 | 9842 | 1.484 | 1.797 |

| 25 | 1.227 | 14958 | 13754 | 1.506 | 1.088 |

| 26 | 1.236 | 24034 | 5137 | 1.529 | 4.679 |

| 35 | 1.318 | 16932 | 11395 | 1.738 | 1.486 |

| 36 | 1.327 | 25790 | 3603 | 1.762 | 7.158 |

| 40 | 1.364 | 20005 | 8580 | 1.860 | 2.332 |

| 41 | 1.373 | 24977 | 4215 | 1.884 | 5.926 |

| 45 | 1.409 | 18437 | 10211 | 1.986 | 1.806 |

| 60 | 1.545 | 18891 | 9891 | 2.388 | 1.910 |

| 61 | 1.555 | 25834 | 3135 | 2.417 | 8.241 |

| 85 | 1.773 | 20463 | 8206 | 3.143 | 2.494 |

| 110 | 2.000 | 22624 | 5941 | 4.000 | 3.808 |

For a few rows, such as the first and the last, agreement between the last two columns is quite good. However, there are some decidedly anomalous cases in between (particularly the numbers in bold). As delayB changes from 35 to 36, the goodput ratio jumps from 1.486 to 7.158. Similar dramatic changes in goodput appear as delayB ranges through the sets {23, 24, 25, 26}, {40, 41, 45}, and {60, 61}. These values were, admittedly, specially chosen by trial and error to illustrate relatively discontinuous behavior of the goodput ratio, but, still, what is going on?

31.3.4 Phase Effects¶

This erratic behavior in the goodput ratio in the table above turns out to be due to what are called phase effects in [FJ92]; transmissions of the two TCP connections become precisely synchronized in some way that involves a persistent negative bias against one of them. What is happening is that a “race condition” occurs for the last remaining queue vacancy, and one connection consistently loses this race the majority of the time.

31.3.4.1 Single-sender phase effects¶

We begin by taking a more detailed look at the bottleneck queue when no competition is involved. Consider a single sender A using a fixed window size to send to destination B through bottleneck router R (so the topology is A–R–B), and suppose the window size is large enough that R’s queue is not empty. For the sake of definiteness, assume R’s bandwidth delay is 10 ms/packet; R will send packets every 10 ms, and an ACK will arrive back at A every 10 ms, and A will transmit every 10 ms.

Now imagine that we have an output meter that reports the percentage that has been transmitted of the packet R is currently sending; it will go from 0% to 100% in 10 ms, and then back to 0% for the next packet.

Our first observation is that at each instant when a packet from A fully arrives at R, R is always at exactly the same point in forwarding some earlier packet on towards B; the output meter always reads the same percentage. This percentage is called the phase of the connection, sometimes denoted 𝜙.

We can determine 𝜙 as follows. Consider the total elapsed time for the following:

- R finishes transmitting a packet and it arrives at B

- its ACK makes it back to A

- the data packet triggered by that ACK fully arrives at R

In the absence of other congestion, this R-to-R time includes no variable queuing delays, and so is constant. The output-meter percentage above is determined by this elapsed time. If for example the R-to-R time is 83 ms, and Data[N] leaves R at T=0, then Data[N+winsize] (sent by A upon arrival of ACK[N]) will arrive at R when R has completed sending packets up through Data[N+8] (80 ms) and is 3 ms into transmitting Data[N+9]. We can get this last as a percentage by dividing 83 by R’s 10-ms bandwidth delay and taking the fractional part (in this case, 30%). Because the elapsed R-to-R time here is simply RTTnoLoad minus the bandwidth delay at R, we can also compute the phase as

𝜙 = fractional_part(RTTnoLoad ÷ (R’s bandwidth delay)).

If we ratchet up the winsize until the queue becomes full when each new packet arrives, then the phase percentage represents the fraction of the time the queue has a vacancy. In the scenario above, if we start the clock at T=0 when R has finished transmitting a packet, then the queue has a vacancy until T=3 when a new packet from A arrives. The queue is then full until T=10, when R starts transmitting the next packet in its queue.

Finally, even in the presence of competition through R, the phase of a single connection remains constant provided there are no queuing delays along the bidirectional A–B path except at R itself, and there only in the forward direction towards B. Other traffic sent through R can only add delay in integral multiples of R’s bandwidth delay, and so cannot affect the A–B phase.

31.3.4.2 Two-sender phase effects¶

In the present simulation, we can by the remark in the previous paragraph calculate the phase for each sender; let these be 𝜙A and 𝜙B. The significance of phase to competition is that whenever A and B send packets that happen to arrive at R in the same 10-ms interval while R is forwarding some other packet, if the queue has only one vacancy then the connection with the smaller phase will always win it.

As a concrete example, suppose that the respective RTTnoLoad’s of A and B are 221 and 263 ms. Then A’s phase is 0.1 (fractional_part(221÷10)) and B’s is 0.3. The important thing is not that A’s packets take less time, but that in the event of a near-tie A’s packet must arrive first at R. Imagine that R forwards a B packet and then, four packets (40 ms) later, forwards an A packet. The ACKs elicited by these packets will cause new packets to be sent by A and B; A’s packet will arrive first at R followed 2 ms later by B’s packet. Of course, R is not likely to send an A packet four packets after every B packet, but when it does so, the arrival order is predetermined (in A’s favor) rather than random.

Now consider what happens when a packet is dropped. If there is a single vacancy at R, and packets from A and B arrive in a near tie as above, then it will always be B’s packet that is dropped. The occasional packet-pair sent by A or B as part of the expansion of cwnd will be the ultimate cause of loss events, but the phase effect has introduced a persistent degree of bias in A’s favor.

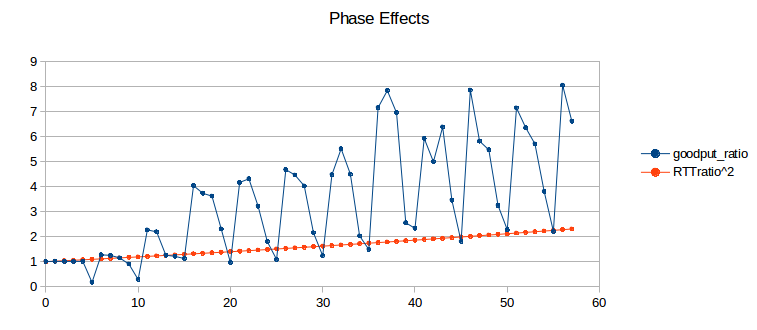

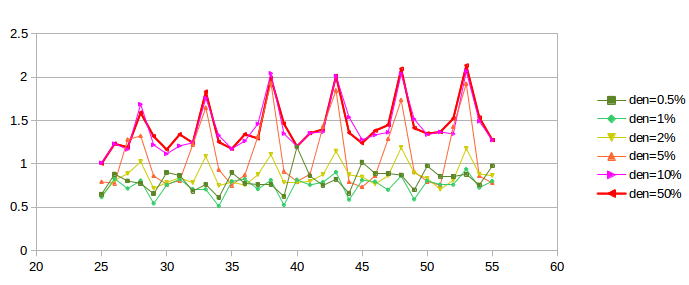

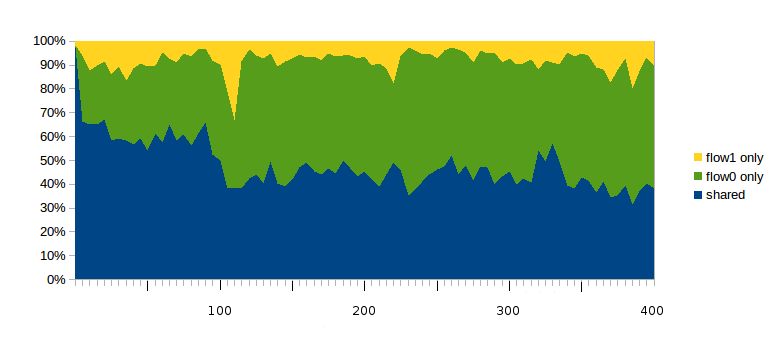

We can visualize phase effects with ns-2 by letting delayB range over, say, 0 to 50 in small increments, and plotting the corresponding values of ratio2 (above). Classically we expect ratio2 to be close to 1.00. In the graph below, the blue curve represents the goodput ratio; it shows a marked (though not perfect) periodicity with period 5 ms.

The orange curve represents (RTT_ratio)2; according to 20.3 TCP Reno Fairness with Synchronized Losses we would expect the blue and orange curves to be about the same. When the blue curve is high, the slower B–D connection is proportionately at an unexpected disadvantage. Seldom do phase effects work in favor of the B–D connection, because A’s phase here is quite small (0.144, based on A’s exact RTTnoLoad of 231.44 ms). (If we change the A–R propagation delay (basedelay) to 12 ms, making A’s phase 0.544, the blue curve oscillates somewhat more evenly both above and below the orange curve, but still with approximately the same amplitude.)

Recall that a 5 ms change in delayB corresponds to a 10 ms change in the A–D connection’s RTT, equal to router R’s transmission time. What is happening here is that as the B–D connection’s RTT increases through a range of 10 ms, it cycles through from phase-effect neutrality to phase-effect deficit and back.

31.3.5 Minimizing Phase Effects¶

In the real world, the kind of precise transmission synchronization that leads to phase effects is seldom evident, though perhaps this has less to do with rarity and more with the fact that head-to-head TCP competitions are difficult to observe intimately. Usually, however, there seems to be sufficient other traffic present to disrupt the synchronization. How can we break this synchronization in simulations? One way or another, we must inject some degree of randomization into the bulk TCP flows.

Techniques introduced in [FJ92] to break synchronization in ns-2 simulations were random “telnet” traffic – involving smaller packets sent according to a given random distribution – and the use of random-drop queues (not included in the standard ns-2 distribution). The second, of course, means we are no longer simulating FIFO queues.

A third way of addressing phase effects is to make use of the ns-2 overhead variable, which introduces some modest randomization in packet-departure times at the TCP sender. Because this technique is simpler, we will start with it. One difference between the use of overhead and telnet traffic is that the latter has the effect of introducing delays at all nodes of the network that carry the traffic, not just at the TCP sources.

31.3.6 Phase Effects and overhead¶

For our first attempt at introducing phase-effect-avoiding randomization in the competing TCP flows, we will start with ns-2’s TCP overhead attribute. This is equal to 0 by default and is measured in units of seconds. If overhead > 0, then the TCP source introduces a uniformly distributed random delay of between 0 and overhead seconds whenever an ACK arrives and the source is allowed to send a new packet. Because the distribution is uniform, the average delay so introduced is thus overhead/2. To introduce an average delay of 10 ms, therefore, one sets overhead = 0.02. Packets are always sent in order; if packet 2 is assigned a small overhead delay and packet 1 a large overhead delay, then packet 2 waits until packet 1 has been sent. For this reason, it is a good idea to keep the average overhead delay no more than the average packet interval (here 10 ms).

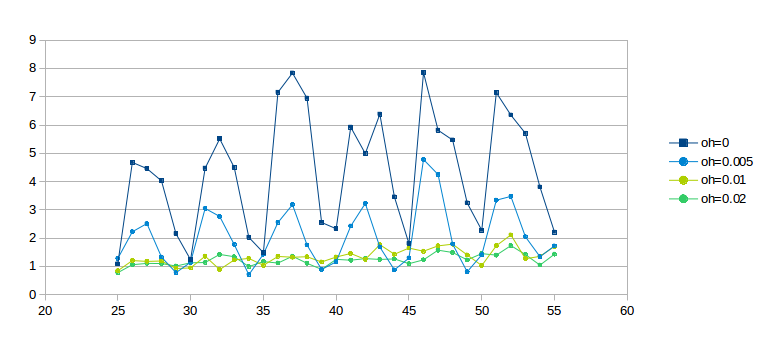

The following graph shows four curves representing overhead values of 0, 0.005, 0.01 and 0.02 (that is, 5 ms, 10 ms and 20 ms). For each curve, ratio1 (not the actual goodput ratio and not ratio2) is plotted as a function of delayB as the latter ranges from 25 to 55 ms. The simulations run for 300 seconds, and bottleneckBW = 0.8. (We will return to the choice of ratio1 here in 31.3.9 The RTT Problem; the corresponding ratio2 graph is however quite similar, at least in terms of oscillatory behavior.)

The dark-blue curve for overhead = 0 is wildly erratic due to phase effects; the light-blue curve for overhead = 0.005 has about half the oscillation. Even the light-green overhead = 0.01 curve exhibits some wiggling; it is not until overhead = 0.02 for the darker green curve that the graph really settles down. We conclude that the latter two values for overhead are quite effective at mitigating phase effects.

One crude way to quantify the degree of graph oscillation is by calculating the mean deviation; the respective deviation values for the curves above are are 1.286, 0.638, 0.136 and 0.090.

Recall that the time to send one packet on the bottleneck link is 0.01 seconds, and that the average delay introduced by overhead d is d/2; thus, when overhead is 0.02 each connection would, if acting alone, have an average sender delay equal to the bottleneck-link delay (though overhead delay is like propagation delay, and so a high overhead will not prevent queue buildup).

Compared to the 10-ms-per-packet R–D transmission time, average delays of 5 and 10 ms per flow (overhead of 0.01 and 0.02 respectively) may not seem disproportionate. They are, however, quite large when compared to the 1.0 ms bandwidth delay on the A–R and B–R legs.

Generally, if the goal is to reduce phase effects then overhead should be comparable to the bottleneck-router transmission rate. Using overhead > 0 does increase the RTT, but in this case not considerably.

We conclude that using overhead to break the synchronization that leads to phase effects appears to have worked, at least in the sense that with the value of overhead = 0.02 the goodput ratio increases more-or-less monotonically with increasing delayB.

The problem with using overhead this way is that it does not correspond to any physical network delay or other phenomenon. Its use here represents a decidedly ad hoc strategy to introduce enough randomization that phase effects disappear. That said, it does seem to produce results similar to those obtained by injecting random “telnet” traffic, introduced in the following section.

31.3.7 Phase Effects and telnet traffic¶

We can also introduce anti-phase-effect randomization by making use of the ns-2 telnet application to generate low-to-moderate levels of random traffic. This requires an additional two Agent/TCP objects, representing A–D and B–D telnet connections, to carry the traffic; this telnet traffic will then introduce slight delays in the corresponding bulk (ftp) traffic. The size of the telnet packets sent is determined by the TCP agents’ usual packetSize_ attribute.

For each telnet connection we create an Application/Telnet object and set its attribute interval_; in the script fragment below this is set to tninterval. This represents the average packet spacing in seconds; transmissions are then scheduled according to an exponential random distribution with interval_ as its mean. We remark that “real” telnet terminal-login traffic seldom if ever follows an exponential random distribution, though this is not necessarily a concern as there are other common traffic patterns (eg web traffic or database traffic) that fit this model better.

Actual (simulated) transmissions, however, are also constrained by the telnet connection’s sliding window. It is quite possible that the telnet application releases a new packet for transmission, but it cannot yet be sent because the telnet TCP connection’s sliding window is momentarily frozen, waiting for the next ACK. If the telnet packets encounter congestion and the interval_ is small enough then the sender may have a backlog of telnet packets in its outbound queue that are waiting for the sliding window to advance enough to permit their departure.

set tcp10 [new Agent/TCP]

$ns attach-agent $A $tcp10

set tcp11 [new Agent/TCP]

$ns attach-agent $B $tcp11

set end10 [new Agent/TCPSink]

set end11 [new Agent/TCPSink]

$ns attach-agent $D $end10

$ns attach-agent $D $end11

set telnet0 [new Application/Telnet]

set telnet1 [new Application/Telnet]

set tninterval 0.001 ;# see text for discussion

$telnet0 set interval_ $tninterval

$tcp10 set packetSize_ 210

$telnet1 set interval_ $tninterval

$tcp11 set packetSize_ 210

$telnet0 attach-agent $tcp10

$telnet1 attach-agent $tcp11

“Real” telnet packets, besides not necessarily being exponentially distributed, are most often quite small. In the simulations here we use an uncharacteristically large size of 210 bytes, leading to a total packet size of 250 bytes after the 40-byte simulated TCP/IP header is attached. We denote the latter number by actualSize. See exercise 9.0.

The bandwidth theoretically consumed by the telnet connection is simply actualSize/$tninterval; the actual bandwidth may be lower if the telnet packets are encountering congestion as noted above. It is convenient to define an attribute tndensity that denotes the fraction of the R–D link’s bandwith that the Telnet application will be allowed to use, eg 2%. In this case we have

$tninterval = actualSize / ($tndensity * $bottleneckBW)

For example, if actualSize = 250 bytes, and $bottleneckBW corresponds to 1000 bytes every 10 ms, then the telnet connection could saturate the link if its packets were spaced 2.5 ms apart. However, if we want the telnet connection to use up to 5% of the bottleneck link, then $tninterval should be 2.5/0.05 = 50 ms (converted for ns-2 to 0.05 sec).

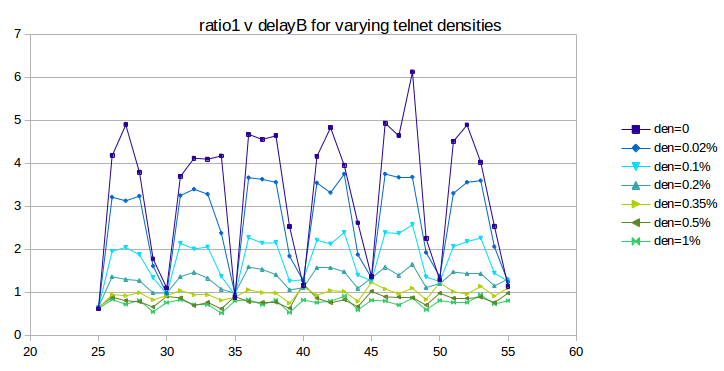

The first question we need to address is whether telnet traffic can sufficiently dampen phase-effect oscillations, and, if so, at what densities. The following graph is the telnet version of that above in 31.3.6 Phase Effects and overhead; bottleneckBW is still 0.8 but the simulations now run for 3000 seconds. The telnet total packet size is 250 bytes. The given telnet density percentages apply to each of the A–D and B–D telnet connections; the total telnet density is thus double the value shown.

As we hoped, the oscillation does indeed substantially flatten out as the telnet density increases from 0 (dark blue) to 1% (bright green); the mean deviation falls from 1.36 to 0.084. So far the use of telnet is very promising.

Unfortunately, if we continue to increase the telnet density past 1%, the oscillation increases again, as can be seen in the next graph:

The first two curves, plotted in green, correspond to the “good” densities of the previous graph, with the vertical axis stretched by a factor of two. As densities increase, however, phase-effect oscillation returns, and the curves converge towards the heavier red curve at the top.

What appears to be happening is that, beyond a density of 1% or so, the limiting factor in telnet transmission becomes the telnet sliding window rather than the random traffic generation, as mentioned in the third paragraph of this section. Once the sliding window becomes the limiting factor on telnet packet transmission, the telnet connections behave much like – and become synchronized with – their corresponding bulk-traffic ftp connections. At that point their ability to moderate phase effects is greatly diminished, as actual packet departures no longer have anything to do with the exponential random distribution that generates the packets.

Despite this last issue, the fact that small levels of random traffic can lead to large reductions in phase effects can be taken as evidence that, in the real world, where other traffic sources are ubiquitous, phase effects will seldom be a problem.

31.3.8 overhead versus telnet¶

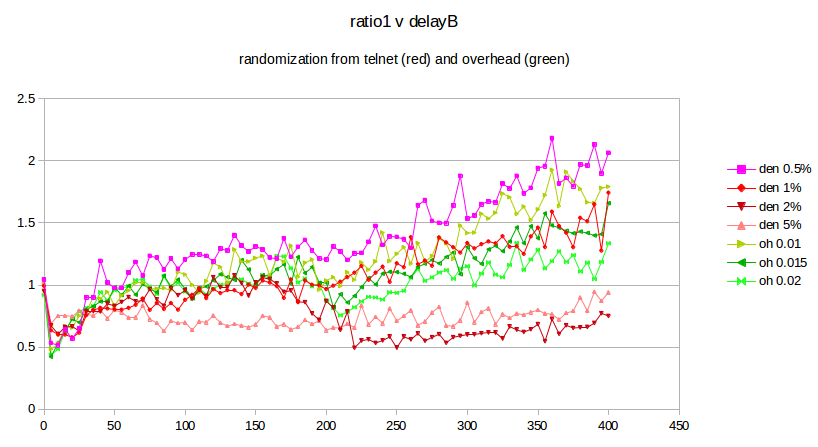

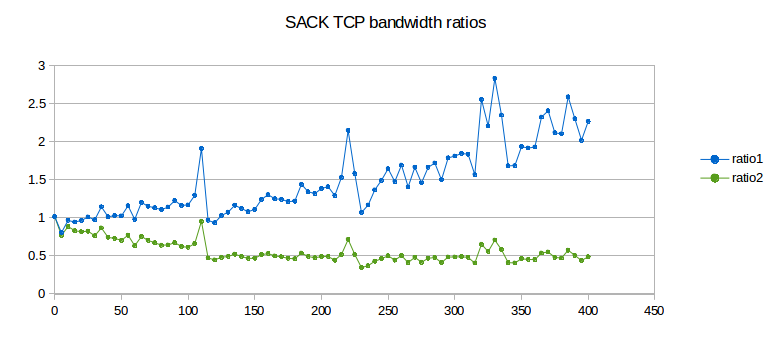

The next step is to compare the effect on the original bulk-traffic flows of overhead randomization and telnet randomization. The graphs below plot ratio1 as a function of delayB as the latter ranges from 0 to 400 in increments of 5. The bottleneckBW is 0.8 Mbps, the queue capacity at R is 20, and the run-time is 3000 seconds.

The four reddish-hued curves represent the result of using telnet with a packet size of 250, at densities ranging from 0.5% to 5%. These may be compared with the three green curves representing the use of overhead, with values 0.01, 0.015 and 0.02. While telnet with a density of 1% is in excellent agreement with the use of overhead, it is also apparent that smaller telnet densities give a larger ratio1 while larger densities give a smaller. This raises the awkward possibility that the exact mechanism by which we introduce randomization may have a material effect on the fairness ratios that we ultimately observe. There is no “right” answer here; different randomization sources or levels may simply lead to differing fairness results.

The advantage of using telnet for randomization is that it represents an actual network phenomenon, unlike overhead. The drawback to using telnet is that the effect on the bulk-traffic goodput ratio is, as the graph above shows, somewhat sensitive to the exact value chosen for the telnet density.

In the remainder of this chapter, we will continue to use the overhead model, for simplicity, though we do not claim this is a universally appropriate approach.

31.3.9 The RTT Problem¶

In all three of the preceding graphs (31.3.6 Phase Effects and overhead, 31.3.7 Phase Effects and telnet traffic and 31.3.8 overhead versus telnet), the green curves on the graphs appear to show that, once sufficient randomization has been introduced to disrupt phase effects, ratio1 converges to 1.0. This, however, is in fact an artifact, due to the second flaw in our simulated network: RTT is not very constant. While RTTnoLoad for the A–D link is about 220 ms, queuing delays at R (with queuesize = 20) can almost double that by adding up to 20 × 10 ms = 200 ms. This means that the computed values for RTTratio are too large, and the computed values for ratio1 are thus too small. While one approach to address this problem is to keep careful track of RTTactual, a simpler strategy is to create a simulated network in which the queuing delay is small compared to the propagation delay and so the RTT is relatively constant. We turn to this next.

31.3.10 Raising the Bandwidth¶

In modern high-bandwidth networks, queuing delays tend to be small compared to the propagation delay; see 19.7 TCP and Bottleneck Link Utilization. To simulate such a network here, we simply increase the bandwidth of all the links tenfold, while leaving the existing propagation delays the same. We achieve this by setting bottleneckBW = 8.0 instead of 0.8. This makes the A–D RTTnoLoad equal to about 221 ms; queuing delays can now amount to at most an additional 20 ms.

The value of overhead also needs to be scaled down by a factor of 10, to 0.002 sec, to reflect an average delay in the same range as the bottleneck-link packet transmission time.

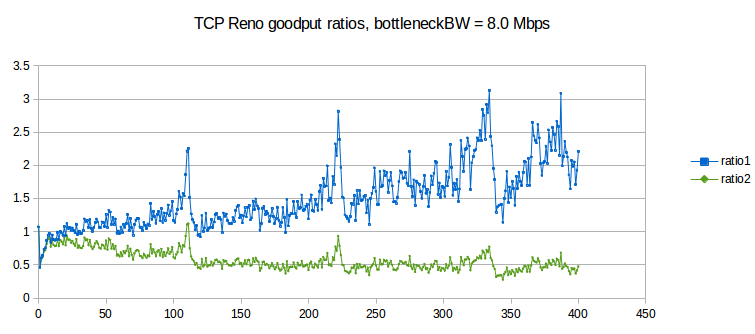

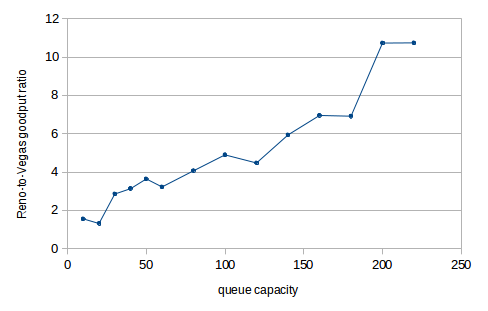

Here is a graph of results for bottleneckBW = 8.0, time = 3000, overhead = 0.002 and queuesize = 20. We still use 220 ms as the estimated RTT, though the actual RTT will range from 221 ms to 241 ms. The delayB parameter runs from 0 to 400 in steps of 1.0.

The first observation to make is that ratio1 is generally too large and ratio2 is generally too small, when compared to 1.0. In other words, neither is an especially good fit. This appears to be a fairly general phenomenon, in both simulation and the real world: TCP Reno throughput ratios tend to be somewhere between the corresponding RTT ratio and the square of the RTT ratio.

The synchronized-loss hypothesis led to the prediction (20.3 TCP Reno Fairness with Synchronized Losses) that the goodput ratio would be close to RTT_ratio2. As this conclusion appears to fail, the hypothesis too must fail, at least to a degree: it must be the case that not all losses are shared.

Throughout the graph we can observe a fair amount of “noise” variation. Most of this variation appears unrelated to the 5 ms period we would expect for phase effects (as in the graph at 31.3.4.2 Two-sender phase effects). However, it is important to increment delayB in amounts much smaller than 5 ms in order to rule this out, hence the increment of 1.0 here.

There are strong peaks at delayB = 110, 220 and 330. These delayB values correspond to increasing the RTT by integral multiples 2, 3 and 4 respectively, and the peaks are presumably related to some kind of higher-level phase effect.

31.3.10.1 Possible models¶

If the synchronized-loss fairness model fails, with what do we replace it? Here are two ad hoc options. First, we can try to fit a curve of the form

goodput_ratio = K×(RTT_ratio)𝛼

to the above data. If we do this, the value for the exponent 𝛼 comes out to about 1.58, sort of a “compromise” between ratio2 (𝛼=2) and ratio1 (𝛼=1), although the value of the exponent here is somewhat sensitive to the details of the simulation.

An entirely different curve to fit to the data, based on the appearance in the graph that ratio2 ≃ 0.5 past 120, is

goodput_ratio = 1/2 × (RTT_ratio)2

We do not, however, possess for either of these formulas a model for the relative losses in the two primary TCP connections that is precise enough to offer an explanation of the formula (though see the final paragraph of 31.4.2.2 Relative loss rates).

31.3.10.2 Higher bandwidth and link utilization¶

One consequence of raising the bottleneck bandwidth is that total link utilization drops, for delayB = 0, to 80% of the bandwidth of the bottleneck link, from 98%; this is in keeping with the analysis of 19.7 TCP and Bottleneck Link Utilization. The transit capacity is 220 packets and another 20 can be in the queue at R; thus an ideal sawtooth would oscillate between 120 and 240 packets. We do have two senders here, but when delayB = 0 most losses are synchronized, meaning the two together behave like one sender with an additive-increase value of 2. As cwnd varies linearly from 120 to 240, it spends 5/6 of the time below the transit capacity of 220 packets – during which period the average cwnd is (120+220)/2 = 170 – and 1/6 of the time with the path 100% utilized; the weighted average estimating total goodput is thus (5/6)×170/220 + (1/6)×1 = 81%.

When delayB = 400, combined TCP Reno goodput falls to about 51% of the bandwidth of the bottleneck link. This low utilization, however, is indeed related to loss and timeouts; the corresponding combined goodput percentage for SACK TCP (which as we shall see in 31.4.2 SACK TCP and Avoiding Loss Anomalies is much better behaved) is 68%.

31.4 TCP Loss Events and Synchronized Losses¶

If the synchronized-loss model is not entirely accurate, as we concluded from the graph above, what does happen with packet losses when the queue fills?

At this point we shift focus from analyzing goodput ratios to analyzing the underlying loss events, using the ns-2 tracefile. We will look at the synchronization of loss events between different connections and at how many individual packet losses may be involved in a single TCP loss response.

One of the conclusions we will reach is that TCP Reno’s response to queue overflows in the face of competition is often quite messy, versus the single-loss behavior in the absence of competition as described above in 31.2.3 Single Losses. If we are trying to come up with a packet-loss model to replace the synchronized-loss hypothesis, it turns out that we would do better to switch to SACK TCP, though use of SACK TCP will not change the goodput ratios much at all. (The fact that Selective ACKs are nearly universal in the real world is another good reason to enable them here.)

Packets are dropped when the queue fills. It may be the case that only a single packet is dropped; it may be the case that multiple packets are dropped from each of multiple connections. We will refer to the set of packets dropped as a drop cluster. After a packet is dropped, TCP Reno discovers this just over one RTT after it was sent, through Fast Retransmit, and then responds by halving cwnd, through Fast Recovery.

TCP Reno retransmits only one lost packet per RTT; TCP NewReno does the same but SACK TCP may retransmit multiple lost packets together. If enough packets were lost from a TCP Reno/NewReno connection, not all of them may be retransmitted by the point the retransmission-timeout timer expires (typically 1-2 seconds), resulting in a coarse timeout. At that point, TCP abandons its Fast-Recovery process, even if it had been progressing steadily.

Eventually the TCP senders that have experienced packet loss reduce their cwnd, and thus the queue utilization drops. At that point we would classically not expect more losses until the senders involved have had time to grow their cwnds through the additive-increase process to the point of again filling the queue. As we shall see in 31.4.1.3 Transient queue peaks, however, this classical expectation is not entirely correct.

It is possible that the packet losses associated with one full-queue period are spread out over sufficient time (more than one RTT, at a minimum) that TCP Reno responds to them separately and halves cwnd more than once in rapid succession. We will refer to this as a loss-response cluster, or sometimes as a tooth cluster.

In the ns-2 simulator, counting individual lost packets and TCP loss responses is straightforward enough. For TCP Reno, there are only two kinds of loss responses: Fast Recovery, in which cwnd is halved, and coarse timeout, in which cwnd is set to 1.

Counting clusters, however, is more subjective; we need to decide when two drops or responses are close enough to one another that they should be counted as part of the same cluster. We use the notion of granularity here: two or more losses separated by less time than the granularity time interval are counted as a single event. We can also use granularity to decide when two loss responses in different connections are to be considered parts of the same event, or to tie loss responses to packet losses.

The appropriate length of the granularity interval is not as clear-cut as might be hoped. In some cases a couple RTTs may be sufficient, but note that the RTTnoLoad of the B–D connection above ranges from 0.22 sec to over 1.0 sec as delayB increases from 0 to 400 ms. In order to cover coarse timeouts, a granularity of from two to three seconds often seems to work well for packet drops.

If we are trying to count losses to estimate the loss rate as in the formula cwnd = 1.225/√p as in 21.2 TCP Reno loss rate versus cwnd, then we should count every loss response separately; the argument in 21.2 TCP Reno loss rate versus cwnd depended on counting all loss responses. The difference between one fast-recovery response and two in rapid succession is that in the latter case cwnd is halved twice, to about a quarter of its original value.

However, if we are interested in whether or not losses between two connections are synchronized, we need again to make use of granularity to make sure two “close” losses are counted as one. In this setting, a granularity of one to two seconds is often sufficient.

31.4.1 Some TCP Reno cwnd graphs¶

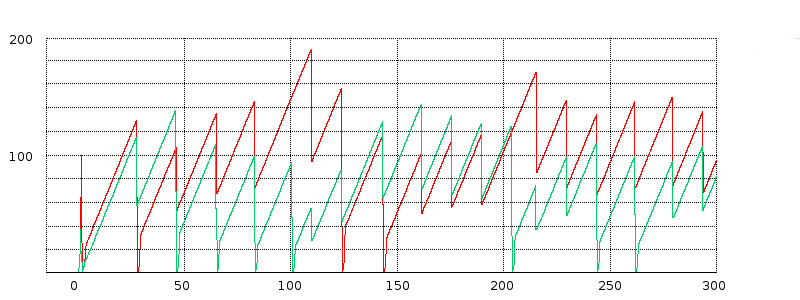

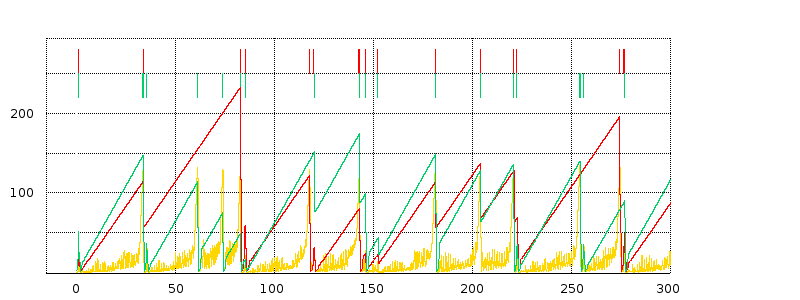

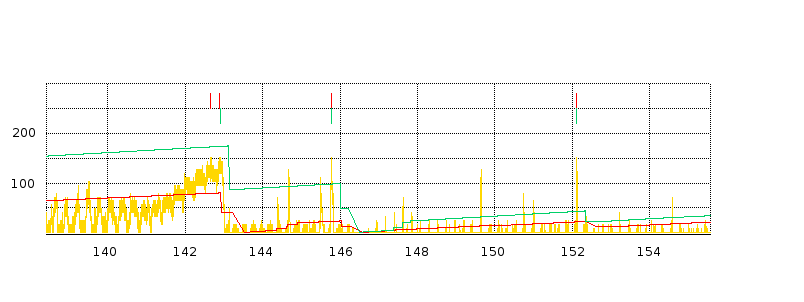

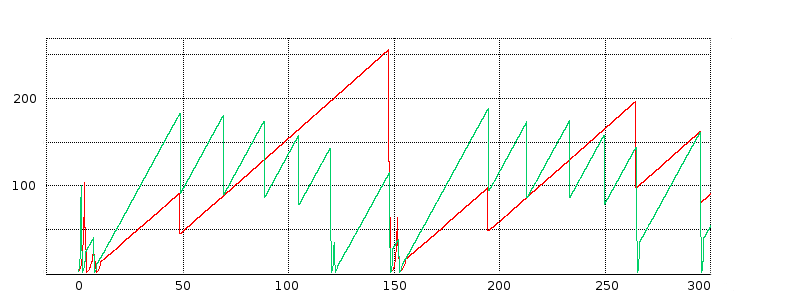

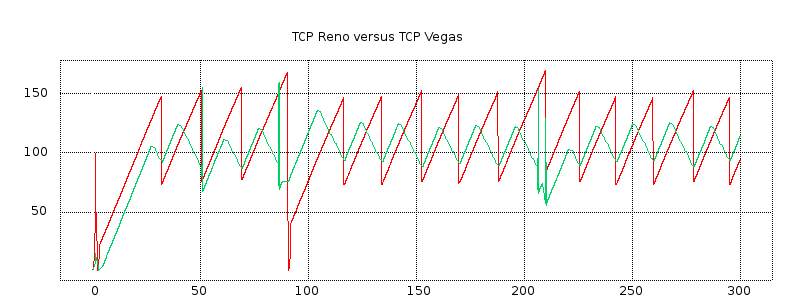

We next turn to some examples of actual TCP behavior, and present a few hopefully representative cwnd graphs. In each figure, the red line represents the longer-path (B–D) flow and the green line represents the shorter (A–D) flow. The graphs are of our usual simulation with bottleneckBW = 8.0 and overhead = 0.002, and run for 300 seconds.

While the immediate goal is to illuminate some of the above loss-clustering issues above, the graphs serve as well to illustrate the general behavior of TCP Reno competition and its variability. We will also use one of the graphs to explore the idea of transient queue spikes.